Historian Niall Ferguson argues that under most scenarios World War One was not inevitable. Multiple reruns of the crisis of July 1914 usually do not end in a world war. “The real lesson of history,” writes Ferguson, making a link between Bosnia-Herzegovina in 1914 and eastern Ukraine 100 years later, “is that a relatively small crisis over a chunk of third-rate eastern European real estate will produce a global conflict only if decision makers make a series of blunders.”[1]

Ferguson is looking back and saying, look what else could have happened. But mostly we look back and figure out that X led to Y led to Z, and we end up lining events up in a narrative that might be haphazard but is coherent enough. We can make the pieces fit. If we are re-living a heroic victory we usually overemphasise the planning and skill that made everything fall into place. If, however, we are deep in failure our post-mortems are likely to wonder about what might have been. Where did we get it wrong? It is all ‘what-ifs’ and ‘if-onlies’.

This is the world of the commission of inquiry. A disaster happens, an inquiry is established, the evidence is submitted and examined, witnesses are cross-examined, and gradually it is revealed that it is not just one thing but a whole string of coincidences, mistakes, confusions, acts of commission and omission that led to calamity. Many of the errors may be egregious, and individuals and organisations may be culpable. Other errors will be systemic – arising from the working of the system itself. The sociologist Charles Perrow called these “Normal Accidents”. In complex systems there are so many interacting parts and so many emergent possibilities that multiple events combine in ways that could not have been foreseen. This outcome is normal – it lurks in the system as a possibility waiting for events to combine.

This is not to say that we should not strive to be responsible and accountable, to do as much as we can to make things better, or to avoid blunders that make things much worse and can harm and kill people or destroy environments or communities. It is to say that we overrate our ability to control things and, in doing so, we often design strategies and take actions that overreach or lull us into complacency.

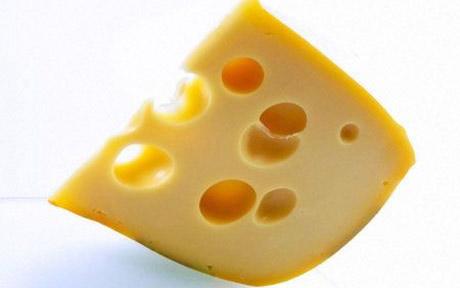

The Swiss cheese model is used to counter this tendency in risk analysis and management in high-reliability sectors such as aviation safety, engineering, and health care. The assumption is that each layer of defence in the system has weaknesses or flaws, like the holes in a slice of Gruyère. If the holes in multiple slices or defences happen to line up, a disaster can be the emergent feature of the system. The model prompts us to look for ways that weaknesses might coincide and to design in redundancies to guard against this.

Some of the best-known and most revealing examples of complex systems are found in the pages of commissions of inquiry. These offer useful teaching examples. The tragedy has been all over the media and now, in retrospect, the chain of causation is clear: X forgot to do A, and then Y did not tell B that X’s steps had not been followed; and Z misread the situation because she was expecting things to be completely regular as they had almost always been.

I have often used examples drawn from accounts of these inquiries. They are excellent for making clear how contingent and non-linear things are, and how little things can add up to big consequences, even in systems where we assume all things are predictable and under control. But there is a way this teaching does not work. It fails a test of immediate relevance. Most of us are not involved with crises that end up being the subject of an official inquiry. These are big things. Usually many people died. Leaders end up feeling that these examples exist outside of their world: they happen to other people, over there. When I have used tragedies with which I have had some connection, where I can speak personally about some aspect of the organisations involved, it seems to impress the audience, but it also distances them from the topic and from me.

The personal is pedagogical, to mangle a slogan from feminism. The question we have been grappling with is whether there are more personal ways to learn about complexity by exploring how it occurs in our daily lives.

[1] “In History’s Shadow”, FT Weekend, 2-3 August 2014, p.5.

Hi Keith and Jennifer

Thank you for your great blogs on complexity. In terms of personal ways to learn about complexity, I am dealing with a painfully difficult, unexpected family issue and searching for a transformative way of dealing with it – in many ways my journey in this mirrors the communication difficulties you are inquiring about. To add to the slogans, a great therapist once said (maybe quoted) that what is most personal is most universal. In terms of learning, family is pretty personal. I already feel, after reading these blogs, especially “not finding the heart of the matter”, a greater spaciousness in my thinking and new ideas about how to approach things. Looking forward to more explorations. Warm regards, Kate